
Can a computer be programmed to simulate a brain? Mathematicians, theoreticians and experimentalists have long been asking this question, whether spurred by a desire to create artificial intelligence (AI) or by the idea that a complex system such as the brain can be understood only when mathematics or a computer can reproduce its behaviour. To try to answer it, investigators have been developing simplified models of the brain’s neural networks since the 1940s1. In fact, today’s explosion in machine learning can be traced back to early work inspired by biological systems.

In turn, the fruits of these efforts are now enabling investigators to ask a slightly different question: could machine learning be used to build computational models that simulate the activity of brains?

At the heart of these developments is a growing body of data on brains. Starting in the 1970s, but more intensively since the mid-2000s, neuroscientists have been producing connectomes: maps of the connectivity and morphology of neurons that capture a static representation of a brain at a particular moment. Alongside such advances, researchers have become better able to make functional recordings, which measure neural activity over time at the resolution of a single cell. Meanwhile, the field of transcriptomics is enabling investigators to measure gene activity in a tissue sample, and even to map when and where that activity occurs.

So far, few efforts have been made to connect these different data sources or to collect them simultaneously from the whole brain of the same specimen. But as the level of detail, size and number of data sets increase, particularly for the brains of relatively simple model organisms, machine-learning systems are making a new approach to brain modelling feasible. This involves training AI programs on connectomes and other data to reproduce the neural activity you would expect to find in biological systems.

Several challenges will need to be addressed for computational neuroscientists and others to start using machine learning to build simulations of entire brains. But a hybrid approach that combines information from conventional brain-modelling techniques with machine-learning systems that are trained on diverse data sets could make the whole endeavour both more rigorous and more informative.

Brain mapping

The quest to map a brain began nearly half a century ago, with a painstaking 15-year effort in the roundworm Caenorhabditis elegans2. Over the past two decades, developments in automated tissue sectioning and imaging have made it much easier for researchers to obtain anatomical data, while advances in computing and automated image analysis have transformed the analysis of these data sets2.

Connectomes have now been produced for the entire brain of C. elegans3, larval4 and adult5 Drosophila melanogaster flies, and for tiny portions of the mouse and human brains (one-thousandth and one-millionth, respectively)2.


The anatomical maps produced so far have major holes. Imaging methods are not yet able to map electrical connections at scale alongside the chemical synaptic ones. Researchers have focused mainly on neurons even though non-neuronal glial cells, which provide support to neurons, seem to play a crucial part in the flow of information through nervous systems6. And much remains unknown about what genes are expressed and what proteins are present in the neurons and other cells being mapped.

Still, such maps are already yielding insights. In D. melanogaster, for example, connectomics has enabled investigators to identify the mechanisms at work in the neural circuits responsible for behaviours such as aggression7. Brain mapping has also revealed how information is computed within the circuits that let flies keep track of where they are and work out how to get from one place to another8. In zebrafish (Danio rerio) larvae, connectomics has helped to uncover the workings of the synaptic circuitry underlying the classification of odours9, the control of the position and movement of the eyeball10 and navigation11.

Efforts that might ultimately lead to a whole mouse brain connectome are under way — although using current approaches, this would probably take a decade or more. A mouse brain is almost 1,000 times bigger than the brain of D. melanogaster, which consists of roughly 150,000 neurons.

Alongside all this progress in connectomics, investigators have been capturing patterns of gene expression with increasing accuracy and specificity using single-cell and spatial transcriptomics. Various technologies also now allow researchers to record neural activity across the entire brains of some vertebrates for hours at a time. In the case of the larval zebrafish brain, that means recording from nearly 100,000 neurons12. These technologies include proteins whose fluorescence changes in response to shifts in voltage or calcium levels, and microscopy techniques that can image living brains in 3D at the resolution of a single cell. (Recordings of neural activity made in this way provide a less accurate picture than electrophysiological recordings, but a much better one than non-invasive methods such as functional magnetic resonance imaging.)
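Fluorescence-based recordings are usually converted into a normalized signal before any modelling. A minimal sketch of one common normalization, ΔF/F, assuming a single neuron's raw fluorescence trace and a baseline F0 taken as a low percentile of the whole trace. The 10th-percentile choice and the helper names are illustrative assumptions; many pipelines use a sliding-window baseline instead.

```python
def percentile(values, q):
    """Return the q-th percentile (0-100) of values, using linear interpolation."""
    s = sorted(values)
    if not s:
        raise ValueError("empty input")
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def delta_f_over_f(trace, baseline_percentile=10.0):
    """Normalize a raw fluorescence trace as (F - F0) / F0.

    Assumption: F0 is a single low percentile of the whole trace, a
    simplification of the sliding-window baselines often used in practice.
    """
    f0 = percentile(trace, baseline_percentile)
    if f0 <= 0:
        raise ValueError("baseline fluorescence must be positive")
    return [(f - f0) / f0 for f in trace]


if __name__ == "__main__":
    # Hypothetical raw trace with one calcium transient in the middle.
    raw = [100.0, 101.0, 99.0, 100.0, 150.0, 180.0, 130.0, 100.0]
    print([round(v, 2) for v in delta_f_over_f(raw)])
```

Dividing by F0 makes traces from dimmer and brighter cells comparable, which matters when thousands of neurons are fed into a single model.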

Maths and physics

When trying to model patterns of brain activity, scientists have mainly used a physics-based approach. This entails generating simulations of nervous systems or portions of nervous systems using mathematical descriptions of the behaviour of real neurons, or of parts of real nervous systems. It also entails making informed guesses about aspects of the circuit, such as the network connectivity, that have not yet been verified by observations.
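The Hodgkin–Huxley equations are the best-known example of such a mathematical description of a real neuron. A minimal sketch of a single-compartment Hodgkin–Huxley neuron, assuming the widely used textbook parameter set (shifted so that rest sits near -65 mV) and simple forward-Euler integration; both are illustrative choices, not taken from the studies cited here.

```python
import math


def vtrap(x, y):
    """Compute x / (exp(x / y) - 1), handling the removable singularity at x = 0."""
    if abs(x / y) < 1e-6:
        return y * (1.0 - x / y / 2.0)
    return x / (math.exp(x / y) - 1.0)


def rates(v):
    """Opening (alpha) and closing (beta) rates of the m, h and n gates, in 1/ms."""
    a_m = 0.1 * vtrap(-(v + 40.0), 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * vtrap(-(v + 55.0), 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_ext, t_ms=100.0, dt=0.01):
    """Integrate a Hodgkin-Huxley neuron under constant current i_ext (uA/cm^2).

    Returns the number of action potentials, counted as upward crossings of 0 mV.
    """
    # Assumed textbook parameters, not fitted to any particular cell.
    c_m = 1.0                                  # membrane capacitance, uF/cm^2
    g_na, g_k, g_l = 120.0, 36.0, 0.3          # maximal conductances, mS/cm^2
    e_na, e_k, e_l = 50.0, -77.0, -54.387      # reversal potentials, mV
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    # Start each gate at its steady-state value for the resting potential.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    spikes, above = 0, False
    for _ in range(int(t_ms / dt)):
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        i_na = g_na * m ** 3 * h * (v - e_na)  # sodium current
        i_k = g_k * n ** 4 * (v - e_k)         # potassium current
        i_l = g_l * (v - e_l)                  # leak current
        v += dt * (i_ext - i_na - i_k - i_l) / c_m
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        if v > 0.0 and not above:
            spikes, above = spikes + 1, True
        elif v < 0.0:
            above = False
    return spikes


if __name__ == "__main__":
    for current in (0.0, 10.0):
        print(f"I = {current} uA/cm^2 -> {simulate_hh(current)} spikes in 100 ms")
```

Every term here encodes an explicit biophysical assumption; scaling such descriptions up to whole circuits is where the guesses about unmeasured connectivity come in.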

In some cases, the guesswork has been extensive (see ‘Mystery models’). But in others, anatomical maps at the resolution of single cells and individual synapses have helped researchers to refute and generate hypotheses4.

For around seven decades, neuroscientists have been refining theoretical descriptions of the circuit that enables D. melanogaster to compute motion. Since it was completed in 2013 (ref. 13), the motion-detection-circuit connectome, along with subsequent larger fly connectomes, has provided a detailed circuit diagram that has favoured some hypotheses about how the circuit works over others.

Yet data collected from real neural networks have also highlighted the limits of an anatomy-driven approach.


A neural-circuit model completed in the 1990s, for example, incorporated a detailed analysis of the connectivity and physiology of the roughly 30 neurons that make up the stomatogastric ganglion of the crab Cancer borealis, a structure that controls the animal’s stomach movements14. By measuring the activity of the neurons in various situations, researchers discovered that even in such a small collection of neurons, seemingly subtle changes, such as the introduction of a neuromodulator (a substance that alters the properties of neurons and synapses), can completely change the circuit’s behaviour. This suggests that even when connectomes and other rich data sets are used to guide and constrain hypotheses about neural circuits, today’s data might not be detailed enough for modellers to capture what is going on in biological systems15.

This is an area in which machine learning could provide a way forward.

Machine-learning models could be trained to produce neural-network behaviour that is consistent with that of real neural networks, as measured using cellular-resolution functional recordings, with connectomic and other data guiding the optimization of thousands or even billions of parameters.

Such machine-learning models could combine information from conventional brain-modelling techniques, such as the Hodgkin–Huxley model, which describes how action potentials (rapid changes in voltage across a neuron’s membrane) are initiated and propagated, with parameters that are optimized using connectivity maps, functional-activity recordings or other data sets obtained for entire brains. Alternatively, machine-learning models could comprise ‘black box’ architectures that contain little explicitly specified biological knowledge but billions or hundreds of billions of parameters, all empirically optimized.
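To make connectome-constrained parameter optimization concrete, here is a toy sketch under several loudly stated assumptions: a four-neuron discrete-time rate model (x_next = tanh(W x)) stands in for a biophysical one, a hypothetical ring-shaped connectivity mask stands in for a connectome, and the "recordings" are synthetic, generated from known weights plus noise. Only weights permitted by the mask are fitted, by plain gradient descent on one-step prediction error; real efforts would involve vastly more parameters and automatic-differentiation libraries.

```python
import math
import random


def predict(W, mask, x):
    """One step of a discrete-time rate model: x_next = tanh(W_masked @ x)."""
    n = len(x)
    return [math.tanh(sum(W[i][j] * x[j] for j in range(n) if mask[i][j]))
            for i in range(n)]


def loss_and_grad(W, mask, xs):
    """Mean squared one-step prediction error and its gradient w.r.t. W.

    Gradients exist only where the connectome mask allows a synapse, so
    connections absent from the map are never created by the fit.
    """
    n, pairs = len(W), len(xs) - 1
    grad = [[0.0] * n for _ in range(n)]
    loss = 0.0
    for t in range(pairs):
        x, y = xs[t], xs[t + 1]
        pred = predict(W, mask, x)
        for i in range(n):
            err = pred[i] - y[i]
            loss += err * err
            g = 2.0 * err * (1.0 - pred[i] ** 2)  # chain rule through tanh
            for j in range(n):
                if mask[i][j]:
                    grad[i][j] += g * x[j]
    scale = 1.0 / (pairs * n)
    return loss * scale, [[v * scale for v in row] for row in grad]


def fit_weights(mask, xs, lr=0.5, iters=200):
    """Gradient descent from zero weights; returns weights and loss history."""
    n = len(mask)
    W = [[0.0] * n for _ in range(n)]
    history = []
    for _ in range(iters):
        loss, grad = loss_and_grad(W, mask, xs)
        history.append(loss)
        for i in range(n):
            for j in range(n):
                W[i][j] -= lr * grad[i][j]
    return W, history


def synthetic_recording(W_true, mask, steps, noise=0.5, seed=0):
    """Hypothetical 'functional recording' generated from known weights plus noise."""
    rng = random.Random(seed)
    x = [0.0] * len(W_true)
    xs = [x]
    for _ in range(steps):
        x = [v + rng.gauss(0.0, noise) for v in predict(W_true, mask, x)]
        xs.append(x)
    return xs


if __name__ == "__main__":
    mask = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1], [1, 0, 0, 0]]  # hypothetical ring
    W_true = [[0.0, 1.5, 0.0, 0.0], [0.0, 0.0, -1.2, 0.0],
              [0.0, 0.0, 0.0, 0.8], [-1.0, 0.0, 0.0, 0.0]]
    xs = synthetic_recording(W_true, mask, steps=200)
    W_fit, history = fit_weights(mask, xs)
    print(f"loss {history[0]:.3f} -> {history[-1]:.3f}")
    for i in range(4):
        for j in range(4):
            if mask[i][j]:
                print(f"w[{i}][{j}]: true {W_true[i][j]:+.2f}, fitted {W_fit[i][j]:+.2f}")
```

The mask is the point of contact with anatomy: the connectome decides which parameters exist, and the recordings decide their values.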

Researchers could evaluate such models, for instance, by comparing their predictions about the neural activity of a system with recordings from the actual biological system. Crucially, they would assess how well the model’s predictions hold up when the machine-learning program is given data that it wasn’t trained on, as is standard practice in the evaluation of machine-learning systems.
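A minimal sketch of held-out evaluation, assuming a single synthetic activity trace (an AR(1) process standing in for one recorded neuron), a one-step AR(1) predictor as the "model", and R² as the score. All names are hypothetical. The split is chronological, so the model never sees the test segment, and a constant predictor set to the training mean serves as a baseline that any useful model should beat.

```python
import random


def ar1_trace(steps, a=0.9, noise=1.0, burn_in=100, seed=0):
    """Synthetic stand-in for one neuron's recorded activity: x[t+1] = a*x[t] + noise."""
    rng = random.Random(seed)
    x, out = 0.0, []
    for t in range(burn_in + steps):
        x = a * x + rng.gauss(0.0, noise)
        if t >= burn_in:  # discard the start-up transient
            out.append(x)
    return out


def r_squared(pred, actual):
    """Fraction of variance in actual explained by pred (can be negative)."""
    mean = sum(actual) / len(actual)
    ss_res = sum((p - y) ** 2 for p, y in zip(pred, actual))
    ss_tot = sum((y - mean) ** 2 for y in actual)
    return 1.0 - ss_res / ss_tot


def fit_ar1(trace):
    """Least-squares coefficient for the one-step predictor x[t+1] ~ a * x[t]."""
    num = sum(x0 * x1 for x0, x1 in zip(trace[:-1], trace[1:]))
    den = sum(x0 * x0 for x0 in trace[:-1])
    return num / den


def evaluate_held_out(trace, train_frac=0.8):
    """Fit on the first part of the recording; score one-step predictions on the rest."""
    split = int(len(trace) * train_frac)
    train, test = trace[:split], trace[split:]
    a = fit_ar1(train)  # the model never sees the test segment
    model_r2 = r_squared([a * x for x in test[:-1]], test[1:])
    train_mean = sum(train) / len(train)
    baseline_r2 = r_squared([train_mean] * (len(test) - 1), test[1:])
    return model_r2, baseline_r2


if __name__ == "__main__":
    model_r2, baseline_r2 = evaluate_held_out(ar1_trace(5000))
    print(f"held-out R^2: model {model_r2:.2f}, constant baseline {baseline_r2:.2f}")
```

A random split of time points would leak information, because neighbouring samples in a neural recording are strongly correlated; splitting in time is the safer default.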

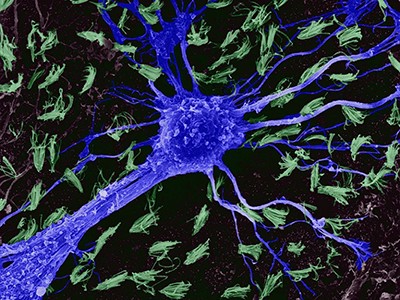

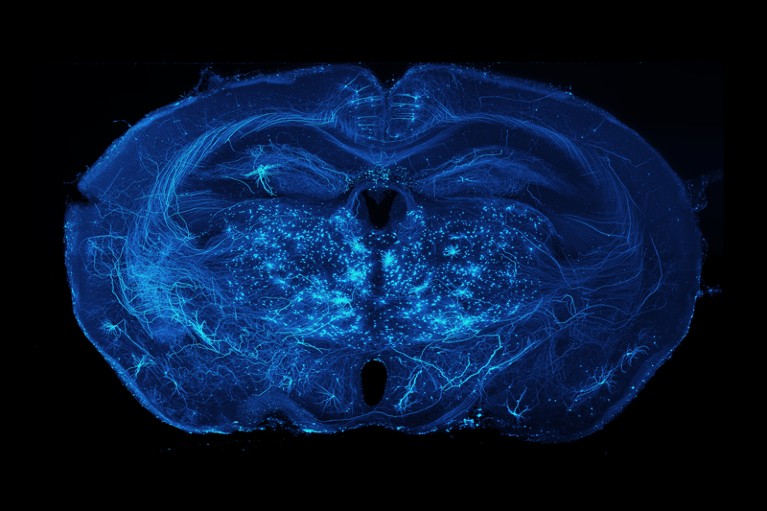

Axonal projections of neurons in a mouse brain. Credit: Adam Glaser, Jayaram Chandrashekar, Karel Svoboda, Allen Institute for Neural Dynamics

This approach would make brain modelling that encompasses thousands or more neurons more rigorous. Investigators would be able to assess, for instance, whether simpler models that are easier to compute do a better job of simulating neural networks than do more complex ones that are fed more detailed biophysical information, or vice versa.

Machine learning is already being harnessed in this way to improve understanding of other hugely complex systems. Since the 1950s, for example, weather-prediction systems have generally relied on carefully constructed mathematical models of meteorological phenomena, with modern systems resulting from iterative refinements of such models by hundreds of researchers. Yet, over the past five years or so, researchers have developed several weather-prediction systems using machine learning. These contain fewer assumptions in relation to how pressure gradients drive changes in wind velocity, for example, and how that in turn moves moisture through the atmosphere. Instead, millions of parameters are optimized by machine learning to produce simulated weather behaviour that is consistent with databases of past weather patterns16.

This way of doing things does present some challenges. Even if a model makes accurate predictions, it can be difficult to explain how it does so. Models are also often unable to make predictions about scenarios that were not represented in the data they were trained on: a weather model trained to forecast the days ahead has trouble extending that forecast weeks or months into the future. But in some cases, such as predicting rainfall over the next several hours, machine-learning approaches already outperform classical ones17. Machine-learning models offer practical advantages, too: their underlying code is simpler, and scientists with less specialist meteorological knowledge can use them.

For brain modelling, this kind of approach could help to fill in some of the gaps in current data sets and reduce the need for ever more detailed measurements of individual biological components, such as single neurons. At the same time, as more comprehensive data sets become available, it would be straightforward to incorporate them into the models.

Think bigger

To pursue this idea, several challenges will need to be addressed.

Machine-learning programs will only ever be as good as the data used to train and evaluate them. Neuroscientists should therefore aim to acquire data sets from the whole brain of specimens — even from the entire body, should that become more feasible. Although it is easier to collect data from portions of brains, modelling a highly interconnected system such as a neural network using machine learning is much less likely to generate useful information if many parts of the system are absent from the underlying data.

Researchers should also strive to obtain anatomical maps of neural connections and functional recordings (and perhaps, in the future, maps of gene expression) from the whole brain of the same specimen. Currently, individual groups tend to focus on obtaining only one of these, rather than acquiring both from the same animal.


With only 302 neurons, the C. elegans nervous system might be sufficiently hard-wired for researchers to be able to assume that a connectivity map obtained from one specimen would be the same for any other — although some studies suggest otherwise18. But for larger nervous systems, such as those of D. melanogaster and zebrafish larvae, connectome variability between specimens is significant enough that brain models should be trained on structure and function data acquired from the same specimen.
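One simple way to put a number on connectome variability is the fraction of connections two specimens share. A minimal sketch, assuming that neurons have already been matched one-to-one across animals (itself a nontrivial step) and using the Jaccard index of the two edge sets; the neuron IDs, synapse counts and threshold are hypothetical.

```python
def edge_set(connectome, min_synapses=1):
    """Return directed edges (pre, post) supported by at least min_synapses synapses.

    connectome maps (presynaptic, postsynaptic) neuron-ID pairs to synapse counts.
    """
    return {edge for edge, count in connectome.items() if count >= min_synapses}


def jaccard(a, b):
    """Fraction of edges shared by two specimens: |A & B| / |A | B|."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


if __name__ == "__main__":
    # Hypothetical synapse counts for the same three neurons in two animals.
    specimen_a = {("n1", "n2"): 5, ("n2", "n3"): 1, ("n3", "n1"): 4}
    specimen_b = {("n1", "n2"): 6, ("n2", "n3"): 2, ("n1", "n3"): 1}
    for threshold in (1, 2):
        overlap = jaccard(edge_set(specimen_a, threshold), edge_set(specimen_b, threshold))
        print(f"min_synapses={threshold}: edge overlap {overlap:.2f}")
```

The answer depends on how weak a connection is allowed to be before it counts, which is one reason structure and function are best measured in the same animal rather than borrowed from another.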

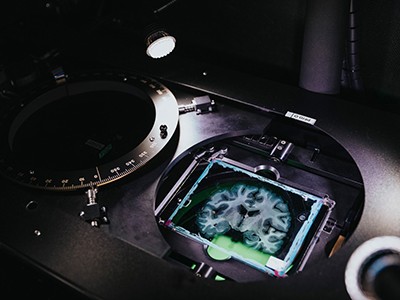

Currently, this can be achieved only in two common model organisms. The bodies of C. elegans and larval zebrafish are transparent, so researchers can make functional recordings across the organisms’ entire brains and pinpoint activity to individual neurons. Immediately after such recordings are made, the animal can be killed, embedded in resin and sectioned so that its neural connections can be mapped. In the future, researchers could expand the set of organisms for which such combined data acquisition is possible, for instance by developing new non-invasive ways to record neural activity at high resolution, perhaps using ultrasound.

Obtaining such multimodal data sets in the same specimen will require extensive collaboration between researchers, investment in big-team science and increased funding-agency support for more holistic endeavours19. But there are precedents for this type of approach, such as the US Intelligence Advanced Research Projects Activity’s MICrONS project, which between 2016 and 2021 obtained functional and anatomical data for one cubic millimetre of mouse brain.

Besides acquiring these data, neuroscientists would need to agree on the key modelling targets and the quantitative metrics by which to measure progress. Should a model aim to predict, from a past state, the behaviour of a single neuron or that of an entire brain? Should the activity of individual neurons be the key metric, or the percentage of hundreds of thousands of neurons that are active? Likewise, what constitutes an accurate reproduction of the neural activity seen in a biological system? Formal, agreed benchmarks will be crucial for comparing modelling approaches and tracking progress over time.
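The choice of metric matters because different metrics can disagree about the same prediction. A minimal sketch, assuming activity stored as a neurons-by-time list of lists, an arbitrary activity threshold of 0.5, and hypothetical function names: a prediction that assigns the right activity to the wrong neurons scores perfectly on a population metric and as badly as possible on a single-neuron metric.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (neither may be constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)


def mean_neuron_correlation(pred, actual):
    """Single-neuron metric: Pearson r per neuron, averaged over neurons."""
    return sum(pearson(p, a) for p, a in zip(pred, actual)) / len(actual)


def fraction_active(activity, threshold):
    """Fraction of neurons above threshold at each time step (neurons x time)."""
    n_neurons, n_steps = len(activity), len(activity[0])
    return [sum(1 for row in activity if row[t] > threshold) / n_neurons
            for t in range(n_steps)]


def population_error(pred, actual, threshold=0.5):
    """Population metric: mean absolute error in the fraction of active neurons."""
    fp, fa = fraction_active(pred, threshold), fraction_active(actual, threshold)
    return sum(abs(x - y) for x, y in zip(fp, fa)) / len(fa)


if __name__ == "__main__":
    recorded = [[0.0, 1.0, 0.0, 1.0], [1.0, 0.0, 1.0, 0.0]]
    swapped = [recorded[1], recorded[0]]  # right population statistics, wrong neurons
    print("per-neuron r:", mean_neuron_correlation(swapped, recorded))
    print("population error:", population_error(swapped, recorded))
```

A benchmark that reports only one of these numbers could reward a model for the wrong reasons, which is why agreeing on targets in advance is important.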

Lastly, to open up brain-modelling challenges to diverse communities, including computational neuroscientists and specialists in machine learning, investigators would need to articulate to the broader scientific community what modelling tasks are the highest priority and which metrics should be used to evaluate a model’s performance. WeatherBench, an online platform that provides a framework for evaluating and comparing weather forecasting models, provides a useful template16.
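Shared benchmarks of this kind typically score every entrant on the same held-out data and against the same simple reference forecast. A minimal sketch of that idea for neural activity, assuming a single activity trace, a persistence baseline ("activity stays where it was") and an MSE-based skill score. The entrant models and all function names are hypothetical, and this is not WeatherBench's actual code or metric suite.

```python
def mse(pred, actual):
    """Mean squared error between two equal-length sequences."""
    return sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual)


def persistence(context, horizon):
    """Reference forecast: activity stays at its last observed value."""
    return [context[-1]] * horizon


def linear_trend(context, horizon):
    """Hypothetical entrant: extrapolate the last observed change."""
    slope = context[-1] - context[-2]
    return [context[-1] + slope * (k + 1) for k in range(horizon)]


def context_mean(context, horizon):
    """Hypothetical entrant: predict the mean of the context window."""
    m = sum(context) / len(context)
    return [m] * horizon


def leaderboard(models, context, target):
    """Rank models by skill = 1 - MSE_model / MSE_persistence.

    1 is perfect, 0 matches the baseline, negative is worse than the baseline.
    Assumes the baseline is not itself perfect (nonzero reference MSE).
    """
    ref = mse(persistence(context, len(target)), target)
    scores = {name: 1.0 - mse(fn(context, len(target)), target) / ref
              for name, fn in models.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    entrants = {"persistence": persistence, "linear_trend": linear_trend,
                "context_mean": context_mean}
    for name, skill in leaderboard(entrants, [0.0, 1.0, 2.0, 3.0], [4.0, 5.0, 6.0]):
        print(f"{name:>13}: skill {skill:+.2f}")
```

Expressing results as skill relative to a fixed baseline lets groups working on different models, or different organisms, report numbers that can be compared directly.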

Recapitulating complexity

Some will question — and rightly so — whether a machine-learning approach to brain modelling will be scientifically useful. Could the problem of trying to understand how brains work simply be traded for the problem of trying to understand how a large artificial network works?

Yet the use of a similar approach in a branch of neuroscience concerned with establishing how sensory stimuli (for example, sights and smells) are processed and encoded by the brain is encouraging. Researchers are increasingly using classically modelled neural networks, in which some of the biological details are specified, in combination with machine-learning systems. The latter are trained on massive visual or audio data sets to reproduce the visual or auditory capabilities of nervous systems, such as image recognition. The resulting networks show surprising similarities to their biological counterparts, but are easier to analyse and interrogate than real neural networks.
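One common way such model-brain similarities are quantified is representational similarity analysis (RSA): compare which stimuli each system treats as alike, rather than matching units one to one. A minimal sketch, assuming responses stored as stimuli-by-units lists, 1 minus Pearson correlation as the dissimilarity, and Spearman correlation between the two dissimilarity matrices; the response values and names are hypothetical, and studies differ in these choices.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (neither may be constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)


def rdm(responses):
    """Upper triangle of the representational dissimilarity matrix.

    responses[s] is the response pattern (one value per unit) to stimulus s.
    """
    s = len(responses)
    return [1.0 - pearson(responses[i], responses[j])
            for i in range(s) for j in range(i + 1, s)]


def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return r


def rsa_score(model_responses, brain_responses):
    """Spearman correlation between model and brain dissimilarity structure."""
    return pearson(ranks(rdm(model_responses)), ranks(rdm(brain_responses)))


if __name__ == "__main__":
    # Hypothetical responses to four stimuli; the two systems have different unit counts.
    brain = [[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0],
             [1.0, 3.0, 2.0, 4.0], [2.0, 2.0, 3.0, 1.0]]
    model = [[0.2, 0.4, 0.9], [0.9, 0.5, 0.1], [0.3, 0.3, 0.8], [0.6, 0.2, 0.4]]
    print(f"RSA score: {rsa_score(model, brain):.2f}")
```

Because only the pattern of dissimilarities is compared, a model with a few units can be scored against recordings from thousands of neurons.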

For now, perhaps it’s enough to ask whether the data from current brain mapping and other efforts can train machine-learning models to reproduce neural activity that corresponds to what would be seen in biological systems. Here, even failure would be interesting — a signal that mapping efforts must go even deeper.
